Understanding Key Technology Trends in 2026: Practical Insights and Use Cases

Outline:

– Edge AI and privacy-preserving intelligence

– Cybersecurity and quantum-safe foundations

– Sustainable computing and resource-aware design

– Next-gen connectivity and real-time systems

– Human-centered interfaces and responsible adoption

Introduction:

The most enduring technology changes arrive quietly, not as spectacle but as better decisions. In 2026, the signal is clearer: progress favors architectures that are close to users, respectful of data, modest in energy appetite, and reliable under pressure. This article translates major shifts into practical moves you can make now, with comparisons that reveal trade-offs and examples you can adapt in the field.

Edge AI in 2026: From Demos to Dependable, On‑Site Intelligence

Edge AI places trained models on devices and nearby gateways instead of sending every request to distant compute. That simple change shifts latency, privacy, and cost in your favor. In controlled pilots, teams commonly measure end‑to‑end response in the tens of milliseconds for vision and sensor workloads, fast enough to catch defects on a line or steer a mobile robot through a narrow aisle. Because raw media stays local, fewer sensitive frames or transcripts ever leave the site, which reduces both exposure and bandwidth charges. At the same time, model optimization techniques (quantization, pruning, and distillation) turn heavyweight networks into compact pipelines that fit within power and memory budgets. Think of it like tuning a musical instrument: the melody is the same, but the instrument is lighter and easier to carry.
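To make quantization concrete, here is a minimal sketch of symmetric 8‑bit quantization applied to a handful of weights. It is an illustration only: the function names and sample values are assumptions, and production toolchains typically add calibration data, per‑channel scales, and hardware‑specific kernels.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One shared scale for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# Illustrative weights (hypothetical values, not taken from any real model).
weights = [0.82, -1.27, 0.003, 0.41]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error per weight is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now fits in one byte instead of four, at the price of a bounded rounding error. Whether that error is acceptable is an empirical question to answer on your own validation set.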

The core comparison matters:

– Cloud‑only: maximal elasticity and centralized updates, but higher latency and recurring transfer costs

– Edge‑only: lowest latency and privacy by default, but challenging fleet updates and capacity planning

– Hybrid: local inference for speed, periodic cloud sync for learning and governance

Real‑world patterns show repeatable value. In industrial settings, compact vision models flag surface defects before packaging, cutting rework and downstream waste. In retail, on‑shelf camera analytics keep counts accurate without streaming full video, while tiny audio models monitor refrigeration units for early fault signatures. For mobility, edge classifiers identify road hazards locally, with aggregated insights used to improve global models during scheduled sync windows. Healthcare deployments route simple triage decisions to on‑device models and escalate ambiguous or low‑confidence cases to centralized review, balancing speed with oversight.
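The escalation pattern in the healthcare example can be sketched as a confidence gate in front of a local model. Everything here is hypothetical: the `route` function, the 0.9 threshold, and the toy scoring model stand in for whatever your deployment actually uses.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class Decision:
    label: str
    confidence: float
    handled_by: str  # "edge" or "central_review"


def route(
    sample: Dict[str, float],
    local_model: Callable[[Dict[str, float]], Tuple[str, float]],
    threshold: float = 0.9,  # assumed cut-off; tune against real validation data
) -> Decision:
    """Accept confident local predictions; escalate the rest for review."""
    label, confidence = local_model(sample)
    if confidence >= threshold:
        return Decision(label, confidence, "edge")
    return Decision(label, confidence, "central_review")


def toy_model(sample: Dict[str, float]) -> Tuple[str, float]:
    """Stand-in for an on-device classifier (purely illustrative)."""
    score = sample["score"]
    label = "urgent" if score >= 0.5 else "routine"
    # Confidence is the distance from the decision boundary, mapped to [0.5, 1.0].
    return label, max(score, 1.0 - score)


confident = route({"score": 0.97}, toy_model)
uncertain = route({"score": 0.60}, toy_model)
```

The threshold is the policy lever: raising it sends more cases to human or centralized review, lowering it keeps more decisions on the device.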

What to watch: deploying many small models enlarges the operational surface area, so versioning, integrity checks, and rollback become weekly concerns, not annual ones. Observability at the edge is different; you will sample telemetry, not stream it all. Fairness and drift also need attention; models trained on one site’s data can silently pick up local biases. Practical steps:

– Start with a latency‑critical case where bandwidth is expensive or regulated

– Build a model registry with signed artifacts and staged rollouts

– Instrument for small, privacy‑preserving metrics that still reveal performance and drift

– Plan for a safe fallback when the model or device fails
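The registry, staged rollout, and fallback steps above can be sketched together in a few lines. This is a simplified illustration under stated assumptions: an HMAC with a shared key stands in for a real signature scheme (a production registry would more likely use asymmetric signatures), and the function names and percentage‑bucket rollout are hypothetical.

```python
import hashlib
import hmac


def sign_artifact(artifact: bytes, key: bytes) -> str:
    """Produce an integrity tag for a model artifact (HMAC as a stand-in)."""
    return hmac.new(key, artifact, hashlib.sha256).hexdigest()


def verify_artifact(artifact: bytes, signature: str, key: bytes) -> bool:
    """Constant-time comparison of the expected and presented tags."""
    return hmac.compare_digest(sign_artifact(artifact, key), signature)


def in_rollout(device_id: str, rollout_percent: int) -> bool:
    """Deterministically place each device in a bucket from 0 to 99."""
    digest = hashlib.sha256(device_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < rollout_percent


def select_version(
    device_id: str,
    artifact: bytes,
    signature: str,
    key: bytes,
    rollout_percent: int,
    current_version: str,
    candidate_version: str,
) -> str:
    """Return the candidate only if the device is in the stage and the tag checks out."""
    if not in_rollout(device_id, rollout_percent):
        return current_version
    if not verify_artifact(artifact, signature, key):
        return current_version  # safe fallback: keep the known-good model
    return candidate_version
```

Because the bucket is derived from the device ID, the same devices stay in the early stage as the percentage grows, which makes regressions easier to trace and rollbacks easier to scope.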

Security Foundations: Zero‑Trust, Automated Defense, and Quantum‑Safe Readiness

Attackers in 2026 automate reconnaissance, chain API weaknesses, and increasingly use synthetic media to pressure humans into hurried approvals. The defensive answer is not a single tool but a posture: verify explicitly, minimize privileges, and monitor continuously. Zero‑trust architecture applies those ideas across identities, devices, networks, and data. In practice that means strong authentication for people and services, short‑lived tokens, micro‑segmented networks, and policy engines that evaluate context before granting access. Telemetry stitched from endpoints, gateways, and applications helps detect lateral movement faster, turning sprawling logs into signals your team can act on.
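As a rough illustration of short‑lived tokens combined with a context check, the sketch below issues an HMAC‑signed token with an expiry and evaluates it together with a device‑compliance flag. The token format, the `SECRET` value, and the policy rules are assumptions made for the example; a real deployment would normally rely on an established token standard and a managed key service.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

SECRET = b"demo-only-secret"  # hypothetical key; never hard-code real secrets


def issue_token(subject: str, scope: str, ttl_seconds: int,
                now: Optional[float] = None) -> str:
    """Create a signed token that expires ttl_seconds after issuance."""
    issued = time.time() if now is None else now
    claims = {"sub": subject, "scope": scope, "exp": issued + ttl_seconds}
    body = base64.urlsafe_b64encode(
        json.dumps(claims, sort_keys=True).encode("utf-8")
    ).decode("ascii")
    signature = hmac.new(SECRET, body.encode("ascii"), hashlib.sha256).hexdigest()
    return f"{body}.{signature}"


def authorize(token: str, required_scope: str, device_compliant: bool,
              now: Optional[float] = None) -> bool:
    """Verify explicitly: signature, expiry, device posture, then scope."""
    current = time.time() if now is None else now
    try:
        body, signature = token.rsplit(".", 1)
    except ValueError:
        return False  # malformed token
    expected = hmac.new(SECRET, body.encode("ascii"), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signature):
        return False
    claims = json.loads(base64.urlsafe_b64decode(body.encode("ascii")))
    if current >= claims["exp"]:
        return False  # short-lived: expired tokens are rejected
    if not device_compliant:
        return False  # context matters, not only identity
    return claims["scope"] == required_scope
```

Every request is evaluated on its own: a valid signature is necessary but not sufficient, since an expired token or a non‑compliant device still results in denial.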

Cryptography is entering a pivotal migration phase. Quantum‑capable adversaries may one day break today’s widely deployed public‑key schemes, and the long shelf‑life of sensitive data creates a “store‑now, decrypt‑later” risk, in which traffic captured today is decrypted once such machines exist. Standards bodies have published algorithms designed to resist quantum attacks; in 2024 NIST finalized its first post‑quantum standards (ML‑KEM for key establishment, ML‑DSA and SLH‑DSA for signatures), and organizations are starting multi‑year transitions. Sensible sequencing looks like this:

– Build a full inventory of where cryptography lives in your stack (protocols, libraries, devices)

– Introduce crypto‑agility: make it possible to swap algorithms without redesigning systems

– Pilot hybrid approaches that combine classical and quantum‑resistant methods for staged rollout

– Prioritize high‑value data and long‑lived secrets for early migration
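Crypto‑agility is easier to see in miniature. The sketch below tags every output with the algorithm that produced it and looks the implementation up in a registry, so switching the default is a configuration change rather than a redesign. Hash functions from the standard library are used purely as stand‑ins; the registry and function names are hypothetical, and a real migration would plug quantum‑resistant key‑establishment and signature schemes into the same kind of seam.

```python
import hashlib
import hmac
from typing import Callable, Dict

# Registry of interchangeable primitives, keyed by a stable identifier.
DIGESTS: Dict[str, Callable[[bytes], bytes]] = {
    "sha256": lambda data: hashlib.sha256(data).digest(),
    "sha3_256": lambda data: hashlib.sha3_256(data).digest(),
}


def fingerprint(data: bytes, algorithm: str) -> str:
    """Return 'algorithm:hex' so the output records how it was produced."""
    if algorithm not in DIGESTS:
        raise ValueError(f"unsupported algorithm: {algorithm}")
    return f"{algorithm}:{DIGESTS[algorithm](data).hex()}"


def verify_fingerprint(data: bytes, tagged: str) -> bool:
    """Verify using whichever algorithm the stored value names."""
    algorithm, _, _ = tagged.partition(":")
    if algorithm not in DIGESTS:
        return False
    return hmac.compare_digest(fingerprint(data, algorithm), tagged)


# Values written under the old default stay verifiable after the switch.
old_record = fingerprint(b"firmware-image", "sha256")
new_record = fingerprint(b"firmware-image", "sha3_256")
```

The design choice that matters is the algorithm tag stored next to each value: without it, old and new data cannot coexist during a staged rollout.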

Data governance complements technical controls. Classify information by sensitivity and purpose; constrain retention to what is operationally necessary; and apply privacy‑enhancing techniques such as anonymization or noise addition where analytics can tolerate it. For machine learning, prefer designs that keep raw data on site when possible and exchange only model updates or aggregated insights. Metrics make posture real: time to detect, time to respond, and blast radius per incident are practical benchmarks you can improve quarter by quarter. Finally, test incident runbooks under realistic conditions. Tabletop drills and red‑team exercises expose brittle assumptions before a real‑world fault does. The outcome is an environment where access is earned per request, secrets rotate on schedule, and critical services fail safely rather than fail open.
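The three posture metrics named above are simple to compute once incidents are recorded consistently. The following sketch assumes a hypothetical `Incident` record with timestamps in seconds and a count of affected services as a proxy for blast radius; your own incident tracker will define these fields differently.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Incident:
    started_at: float    # when the compromise or fault began (seconds)
    detected_at: float   # when monitoring or a person noticed it
    resolved_at: float   # when the response was complete
    affected_services: int  # assumed proxy for blast radius


def posture_metrics(incidents: List[Incident]) -> Dict[str, float]:
    """Summarize detection speed, response speed, and blast radius."""
    if not incidents:
        raise ValueError("at least one incident is required")
    return {
        "mean_time_to_detect": mean(i.detected_at - i.started_at for i in incidents),
        "mean_time_to_respond": mean(i.resolved_at - i.detected_at for i in incidents),
        "mean_blast_radius": mean(i.affected_services for i in incidents),
    }


# Two illustrative incidents (made-up numbers for the example).
quarter = [
    Incident(started_at=0, detected_at=600, resolved_at=4200, affected_services=3),
    Incident(started_at=0, detected_at=1200, resolved_at=3000, affected_services=1),
]
summary = posture_metrics(quarter)
```

Tracking the same three numbers every quarter makes it clear whether investments in telemetry and segmentation are paying off.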

Sustainable Computing: Efficiency, Cooling Choices, and Carbon‑Aware Workloads

Efficiency is no longer a side project; it is a constraint that shapes architecture, budget, and brand reputation. Independent analyses published over recent years estimate that data centers account for roughly one to two percent of global electricity use, with local water footprints that matter in stressed regions. As model sizes and real‑time services grow, so does the responsibility to deliver the same or better outcomes with fewer joules and liters. The good news: many gains come from engineering discipline rather than exotic hardware.

Start with code and workload design. Algorithmic improvements, batched execution, and reduced numeric precision can cut compute cycles substantially. Streaming where appropriate reduces memory churn; event‑driven backends sleep when there is no work to do. Right‑sizing instances avoids the silent penalty of underutilization, and adaptive QoS throttles non‑critical tasks when power is tight. Hardware selection also matters: accelerators and power‑efficient CPUs lower energy per inference or per transaction, especially when models are quantized for the target. On the facility side, airflow management reduces cooling overhead, and heat‑recovery designs turn waste heat into input for building heating. When densities rise, liquid cooling (cold plate or immersion) can shrink overhead energy compared to air‑only designs, though it introduces new considerations around fluid handling and maintenance. A balanced comparison helps:

– Air cooling: simpler operations, broad component compatibility, higher overhead at density

– Cold plate: targeted efficiency, mature supply chain, needs careful sealing and monitoring

– Immersion: standout thermal performance, potential component longevity, specialized procedures

Scheduling adds a powerful lever. Carbon‑aware orchestration shifts flexible jobs to cleaner grid windows, and geo‑aware placement prefers regions with lower marginal emissions for burst capacity. For steady workloads, throttling and model choice by time‑of‑day can preserve experience while trimming peaks. Circularity closes the loop: plan for repairability, component reuse, and certified recycling. Track embodied emissions alongside operational energy, and evaluate vendor take‑back programs before purchase. Make measurement routine:

– Define energy and carbon budgets per service and review them like financial KPIs

– Instrument power at the rack and service level; avoid relying on nameplate values

– Report progress in terms business stakeholders recognize: cost, availability, and risk
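A per‑service energy and carbon budget can start as a small calculation over measured power samples. The sketch below is illustrative: the function names, the fixed sampling interval, and the single grid‑intensity figure are assumptions, and real reporting would use metered data and time‑varying intensity.

```python
from typing import Dict, List, Union


def energy_kwh(power_samples_watts: List[float], interval_seconds: float) -> float:
    """Integrate evenly spaced power samples into kilowatt-hours."""
    joules = sum(power_samples_watts) * interval_seconds
    return joules / 3_600_000  # 1 kWh = 3.6 million joules


def budget_report(
    service: str,
    power_samples_watts: List[float],
    interval_seconds: float,
    budget_kwh: float,
    grid_intensity_g_per_kwh: float,  # assumed constant for the period
) -> Dict[str, Union[str, float, bool]]:
    """Compare measured energy against the service's budget."""
    used = energy_kwh(power_samples_watts, interval_seconds)
    return {
        "service": service,
        "energy_kwh": used,
        "carbon_kg": used * grid_intensity_g_per_kwh / 1000.0,
        "budget_used_fraction": used / budget_kwh,
        "over_budget": used > budget_kwh,
    }


# One hour of measurements at a steady 500 W, sampled once per minute
# (illustrative numbers, not measurements from a real rack).
report = budget_report(
    service="image-resize",
    power_samples_watts=[500.0] * 60,
    interval_seconds=60.0,
    budget_kwh=0.4,
    grid_intensity_g_per_kwh=400.0,
)
```

Reviewing `budget_used_fraction` alongside cost and availability puts energy on the same dashboard as the KPIs stakeholders already watch.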

Sustainability done this way is not just compliance; it is resilience. Systems that run efficiently generally run cooler, throttle gracefully, and recover faster—traits that pay off when traffic surges, supply chains slip, or weather turns unpredictable.
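The carbon‑aware scheduling idea from this section reduces, in its simplest form, to picking the cleanest contiguous window for a flexible job. The sketch below assumes an hourly forecast of grid carbon intensity is available as a plain list; the function name, the forecast values, and the deadline handling are hypothetical.

```python
from typing import List, Optional


def best_start_hour(
    forecast_g_per_kwh: List[float],
    duration_hours: int,
    deadline_hour: Optional[int] = None,
) -> int:
    """Return the start hour whose window has the lowest total intensity.

    The job occupies `duration_hours` consecutive hours and must finish
    by `deadline_hour` (exclusive end), if one is given.
    """
    if duration_hours <= 0:
        raise ValueError("duration_hours must be positive")
    horizon = len(forecast_g_per_kwh)
    last_end = horizon if deadline_hour is None else min(deadline_hour, horizon)
    if duration_hours > last_end:
        raise ValueError("job does not fit before the deadline")

    best_start, best_total = 0, float("inf")
    for start in range(last_end - duration_hours + 1):
        total = sum(forecast_g_per_kwh[start:start + duration_hours])
        if total < best_total:  # strict comparison keeps the earliest tie
            best_start, best_total = start, total
    return best_start


# Illustrative forecast in gCO2/kWh for the next eight hours.
forecast = [450, 420, 300, 180, 160, 210, 390, 480]
flexible_start = best_start_hour(forecast, duration_hours=2)
constrained_start = best_start_hour(forecast, duration_hours=2, deadline_hour=4)
```

A deadline narrows the choice: the same job that would ideally run in the cleanest window may have to accept a somewhat dirtier one to finish on time.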

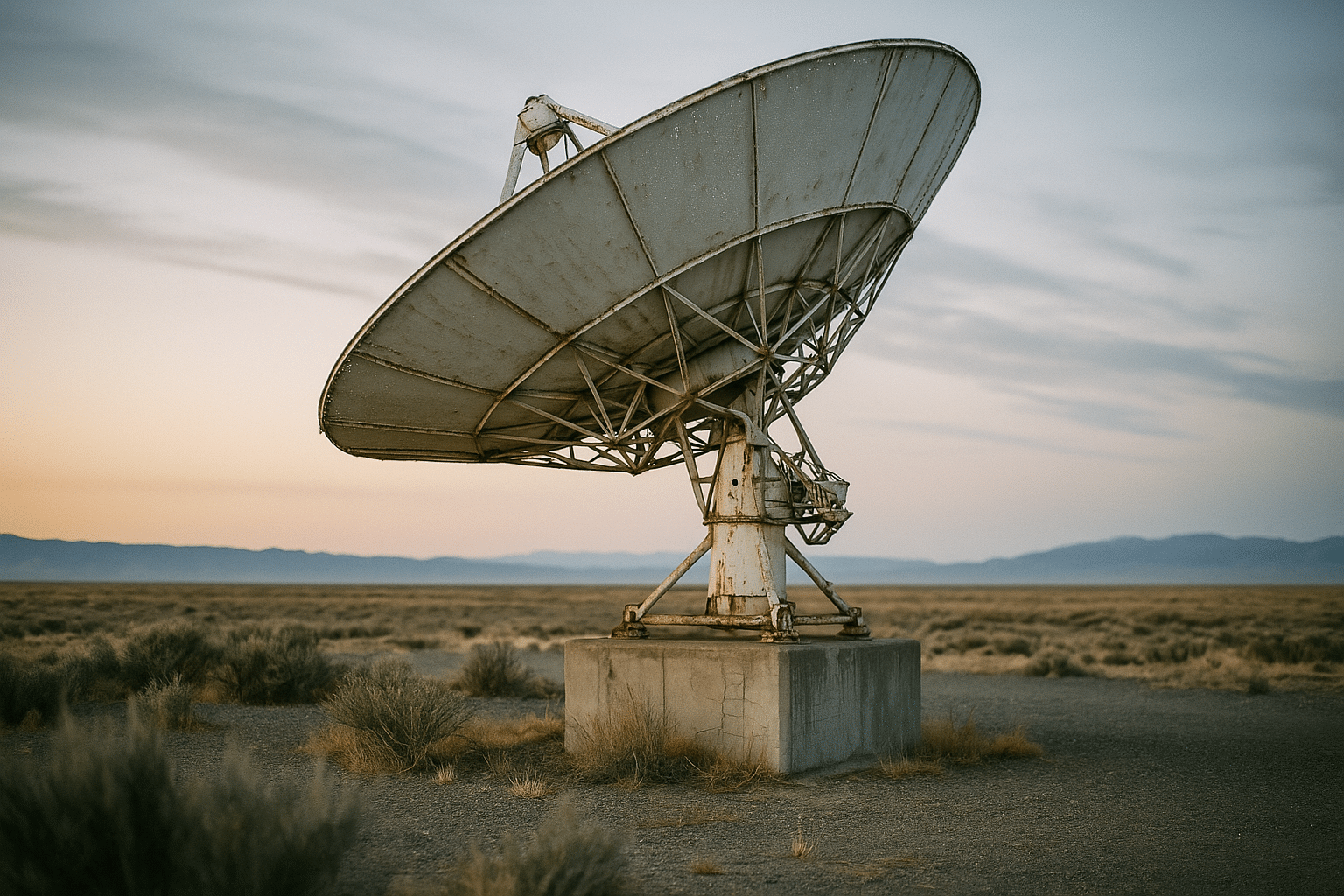

Connectivity Fabric: Private Wireless, Satellites, and Time‑Sensitive Networks

Modern applications depend on predictable timing as much as on raw bandwidth. That is why connectivity in 2026 looks like a fabric woven from multiple threads: local private wireless for mobility, deterministic wired segments for control loops that need microsecond‑level timing, and satellite links for reach beyond terrestrial footprints. Public cellular continues to improve baseline throughput, but enterprises reach for private deployments when they need tailored coverage, interference control, and guaranteed performance in factories, ports, mines, and campuses. In well‑engineered local networks, end‑to‑end latency for control loops can drop to single‑digit milliseconds, enabling coordination among machines, sensors, and edge inference nodes.

Comparisons clarify the design space:

– Public networks: broad coverage, managed upgrades, variable contention in dense areas

– Private wireless: custom radio planning, device admission control, local breakout for edge computing

– Deterministic wired: highly predictable timing for motion control and protection systems

– Satellite: wide coverage for remote assets, improving latency, weather and line‑of‑sight constraints

Use cases span industries. In logistics hubs, tagged assets broadcast their position and condition; yard management software synthesizes locations with arrival schedules to avoid congestion. In energy and utilities, ruggedized sensors report status from distant lines and pipelines, with satellite backhaul ensuring the data arrives even after storms. Agriculture sites blend field sensors with drone imagery and local analytics to adjust irrigation in near‑real time. Healthcare campuses carve out secure, interference‑aware channels for telemetry and patient transport coordination. For knowledge workers, the same fabric supports immersive collaboration and spatial interfaces that remain responsive even when multiple users share a space.

Practical steps keep projects grounded. Begin with a site survey and a spectrum plan; map reflective surfaces, moving obstacles, and potential sources of interference. Decide what traffic must stay local for privacy and latency, and what can traverse shared backbones. Design for graceful degradation: when wireless falters, wired fallbacks or buffer strategies should preserve safety. Finally, align device procurement with the plan: choose radios and sensors that can be securely enrolled, updated over time, and measured for health. Connectivity is not a single pipe; it is an ecosystem that, when designed as such, unlocks reliable real‑time behavior.
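One common buffer strategy for graceful degradation is store‑and‑forward: hold readings locally while the link is down, then deliver them in order when it returns. The sketch below is a minimal version under stated assumptions; the class name, the bounded capacity, and the drop‑oldest policy are illustrative choices, and safety‑critical signals would need a stricter design.

```python
from collections import deque
from typing import Callable, Deque, Dict

Reading = Dict[str, float]


class StoreAndForward:
    """Bounded buffer that preserves ordering across link outages."""

    def __init__(self, capacity: int, send: Callable[[Reading], bool]) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._buffer: Deque[Reading] = deque(maxlen=capacity)
        self._send = send  # returns True when the uplink accepted the reading
        self.dropped = 0

    def drain(self) -> None:
        """Send buffered readings oldest-first until one fails."""
        while self._buffer:
            if not self._send(self._buffer[0]):
                break
            self._buffer.popleft()

    def submit(self, reading: Reading) -> None:
        """Deliver immediately if possible, otherwise buffer locally."""
        self.drain()
        if not self._buffer and self._send(reading):
            return
        if len(self._buffer) == self._buffer.maxlen:
            self.dropped += 1  # the oldest reading is about to be evicted
        self._buffer.append(reading)

    def pending(self) -> int:
        return len(self._buffer)
```

Counting dropped readings matters as much as buffering them: the counter tells operators how much data an outage actually cost, which informs how large the buffer should be.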

Human‑Centered Interfaces and Responsible Adoption: Multimodality, Spatial Context, and Trust

Interfaces are expanding from screens and taps to voices, gestures, and spatial anchors. The result is systems that can see, hear, and map the world—useful, but also easy to misuse if rushed. Multimodal assistants can summarize a meeting, reconcile a photo with a part number, or guide a technician through a repair with anchored overlays. Spatial interfaces transform walkthroughs into living manuals and turn room‑scale data into a workspace you can stand inside. These capabilities reward thoughtful scoping: pair narrow intents with clear success criteria, and you get tools that feel like steady colleagues rather than unpredictable magicians.

Comparisons help product teams choose:

– Text‑only: simple pipelines, strong explainability, limited context from the physical world

– Voice and audio: hands‑free efficiency, ambient noise handling requirements

– Vision and spatial: rich guidance in situ, heavier compute and careful occlusion design

– Multimodal orchestration: flexible handoffs across inputs, higher integration effort

Trust is built with process. Label synthetic media clearly. Log prompts, inputs, and outputs for sensitive actions, and establish an escalation path with human review for decisions that affect safety, finance, or access rights. Bias and drift require guardrails: evaluate datasets for representation gaps and track performance by segment, not just global averages. Privacy matters too—process only what is necessary, retain minimally, and give users visible controls. Accessibility is a design constraint, not a feature request: captions, contrast options, and voice alternatives expand your audience and reduce support load. For change management, communicate intent early, run opt‑in pilots with measurable success metrics, and provide training that shows not only how to use new tools but also when to trust or override them.
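Tracking performance by segment rather than by global average can be as simple as grouping evaluation records before computing accuracy. In this sketch the record layout (segment, predicted label, actual label) and the sample data are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Record = Tuple[str, str, str]  # (segment, predicted, actual)


def accuracy_by_segment(records: List[Record]) -> Dict[str, float]:
    """Compute accuracy separately for each segment."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for segment, predicted, actual in records:
        total[segment] += 1
        if predicted == actual:
            correct[segment] += 1
    return {segment: correct[segment] / total[segment] for segment in total}


def overall_accuracy(records: List[Record]) -> float:
    """Global average, shown only for comparison with the per-segment view."""
    if not records:
        raise ValueError("no records to evaluate")
    hits = sum(1 for _, predicted, actual in records if predicted == actual)
    return hits / len(records)


# Made-up evaluation data: a large segment that performs well and a
# small one that does not.
records = [("site_a", "ok", "ok")] * 8 + [
    ("site_b", "ok", "ok"),
    ("site_b", "ok", "fault"),
]
per_segment = accuracy_by_segment(records)
overall = overall_accuracy(records)
```

In this example the global figure looks healthy while the smaller segment is right only half the time, which is exactly the kind of gap a single average hides.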

To move from promise to practice, define governance that fits your organization’s risk profile. Lightweight review councils can unblock teams while still setting boundaries on data use, model updates, and incident response. Track the right outcomes—time saved on a task, error rates in field work, satisfaction among operators—so debates are anchored in evidence. When interfaces respect human limits and contexts, adoption accelerates because the tools feel natural, not imposed.