Exploring Technology: Innovations and Advancements

Outline

– Why technology matters now and the forces shaping it

– AI, data, and edge computing working together

– Connectivity, devices, and interoperable ecosystems

– Sustainable computing: energy, materials, and circularity

– Trust, security, and strategy for the road ahead

Foundations and Forces: Why Technology Matters Now

Technology is not a parade of gadgets; it is a system of systems that reorganizes how value is created. When connectivity, computing, data, and interfaces move forward together, familiar activities transform: classrooms become hybrid, factories adapt in real time, farms measure microclimates, and clinics reach patients beyond city limits. More than five billion people now access the internet, and billions of embedded sensors quietly record temperature, vibration, and position. This scale matters because it turns small efficiency gains into sweeping societal shifts. Crucially, progress is uneven: while some sectors sprint ahead, others wrestle with skills gaps, legacy processes, and tight margins.

Understanding the present moment starts with the stack that seeds change. At the base sits hardware—chips, memory, and storage—where improvements still compound, even as classic scaling slows. Above that, cloud and edge resources coordinate workloads; data platforms curate streams into trusted datasets; learning systems find patterns; and interfaces—from voice to augmented visuals—translate insights into action. The greatest leaps come when these layers synchronize. Consider telemedicine blended with wearable diagnostics and secure data exchange: the combination reduces travel, widens access, and enables earlier intervention. Or think about logistics where computer vision counts inventory, route software learns from traffic, and handheld devices guide workers step by step, trimming errors and time.

Why does this matter to leaders, builders, and learners? Because the returns to early understanding compound. Small pilot projects teach teams how to measure outcomes, choose metrics that matter, and iterate quickly. A helpful framing is to compare innovation types:

– Sustaining changes refine what exists, improving cost, reliability, or usability.

– Discontinuous shifts rearrange workflows, roles, and even regulation.

– Convergent moves knit multiple technologies together for outsized effect.

Organizations that scan for convergences—say, computer vision plus robotics plus new materials—often find practical breakthroughs hiding in plain sight. The takeaway is pragmatic: watch the stack, track the convergences, and focus on measurable outcomes rather than hype.

Intelligence Everywhere: AI, Data, and Edge in Concert

Artificial intelligence is most useful when treated as a workflow, not a magic trick. Data must be collected responsibly, labeled where needed, governed for quality, and routed through models that are monitored, retrained, and audited. That lifecycle—often called MLOps in practice—decides whether a prototype quietly stalls or becomes a durable capability. Centralized training in large compute clusters remains common, but inference increasingly happens at the edge: on cameras that check product quality, on vehicles that react to hazards, or on phones that summarize content without sending everything upstream. The reason is straightforward: lower latency, lower bandwidth costs, and tighter privacy.

Choosing where to run intelligence is a trade-off among several constraints:

– Latency: milliseconds matter for safety systems and interactive interfaces.

– Privacy: sensitive images or health signals may never leave the device.

– Bandwidth: streaming raw video is expensive; summaries are cheaper.

– Reliability: local inference keeps working during network outages.

– Energy: efficient on-device accelerators can use less power than sending data on a round trip to a data center.
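The constraints above can be read as a rough decision procedure. The sketch below is a minimal illustration that assumes each constraint is a hard rule checked in a fixed order. The `Workload` fields and the `choose_placement` function are hypothetical names. Energy is left out because it depends heavily on the specific hardware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    latency_budget_ms: float   # deadline the use case can tolerate
    network_rtt_ms: float      # measured round trip to the nearest cloud region
    sensitive_data: bool       # e.g. raw images or health signals
    must_work_offline: bool    # must keep running during network outages
    raw_input_mbps: float      # bandwidth needed to stream raw inputs upstream
    uplink_mbps: float         # bandwidth actually available on the uplink


def choose_placement(w: Workload) -> tuple[str, str]:
    """Return ("edge" | "cloud", reason) by checking hard constraints in order."""
    if w.sensitive_data:
        return "edge", "privacy"          # data should not leave the device
    if w.must_work_offline:
        return "edge", "reliability"      # no dependency on the network
    if w.network_rtt_ms >= w.latency_budget_ms:
        return "edge", "latency"          # the round trip alone misses the deadline
    if w.raw_input_mbps > w.uplink_mbps:
        return "edge", "bandwidth"        # raw data cannot be shipped upstream
    return "cloud", "no hard constraint"  # free to use larger central models


# A safety interlock with a 50 ms budget over an 80 ms link stays local.
print(choose_placement(Workload(50, 80, False, False, 2.0, 10.0)))  # ('edge', 'latency')
```

Real systems often score these factors rather than treating each one as a veto. The fixed order here simply makes the trade-off visible.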

In many deployments, a hybrid pattern wins: compact models perform first-pass judgments locally, and edge gateways batch only the ambiguous cases for deeper cloud analysis. Techniques such as model distillation, quantization, and pruning help shrink models without gutting performance, and hardware support for low-precision math speeds up inference.
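Here is a minimal sketch of that hybrid pattern. It assumes the on-device model returns a defect probability for each frame. Confident scores are decided locally, and only the uncertain middle band is grouped into batches for upload. The `triage` function, the 0.2/0.8 thresholds, and the batch size are illustrative assumptions, not tuned values.

```python
from typing import Callable, Iterable


def triage(
    frames: Iterable[str],
    defect_prob: Callable[[str], float],
    low: float = 0.2,
    high: float = 0.8,
    batch_size: int = 32,
) -> tuple[dict[str, str], list[list[str]]]:
    """Decide confident cases locally; batch ambiguous ones for the cloud."""
    if not 0.0 <= low < high <= 1.0:
        raise ValueError("need 0 <= low < high <= 1")
    decided: dict[str, str] = {}
    ambiguous: list[str] = []
    for frame in frames:
        p = defect_prob(frame)          # compact on-device model's score
        if p >= high:
            decided[frame] = "defect"
        elif p <= low:
            decided[frame] = "ok"
        else:
            ambiguous.append(frame)     # too uncertain to call locally
    # Group the ambiguous frames so the gateway uploads them in a few requests.
    batches = [ambiguous[i:i + batch_size] for i in range(0, len(ambiguous), batch_size)]
    return decided, batches


scores = {"f1": 0.95, "f2": 0.05, "f3": 0.50, "f4": 0.60}  # stand-in model outputs
decided, batches = triage(scores, lambda f: scores[f], batch_size=1)
print(decided)   # {'f1': 'defect', 'f2': 'ok'}
print(batches)   # [['f3'], ['f4']]
```

Widening the band between `low` and `high` sends more frames to the cloud, which trades bandwidth for accuracy. In practice the thresholds would be calibrated on held-out data.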

Use cases span industries. In manufacturing, vision models spot surface defects earlier than manual inspection, reducing rework and scrap. In retail spaces, anonymous presence detection guides staffing without storing identifiable images. In agriculture, sensor fusion blends moisture, leaf imagery, and forecast data to fine-tune irrigation, saving water while protecting yield. Healthcare teams use natural-language systems to draft summaries, which clinicians verify and correct, reclaiming minutes that add up to hours each week. Across them all, guardrails are essential: data lineage must show where inputs came from, model evaluation should track fairness and drift, and human review needs a clear override. Rather than aim for sweeping autonomy, many teams prioritize a loop in which models recommend, people decide, and the system learns, raising trust and outcomes together.
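Tracking drift can start simple. The sketch below computes a population stability index (PSI), one common way to compare a live input distribution against the one a model was validated on. PSI is a swapped-in example here; the text does not prescribe a particular method. The bin edges, the `eps` floor, and the 0.1/0.25 cut-offs are assumptions (the cut-offs are a widely used rule of thumb). A `"send to human review"` result stands in for the override path described above.

```python
import math
from bisect import bisect_right
from typing import Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> list[float]:
    """Fraction of values per bin; len(edges) sorted cut points give len(edges)+1 bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(reference: Sequence[float], live: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population stability index between a reference sample and a live sample."""
    total = 0.0
    for e, a in zip(bin_fractions(reference, edges), bin_fractions(live, edges)):
        e, a = max(e, eps), max(a, eps)   # floor empty bins to avoid log(0)
        total += (a - e) * math.log(a / e)
    return total


def drift_status(score: float) -> str:
    # Rule-of-thumb cut-offs, not a universal standard.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "monitor"
    return "send to human review"


edges = [1.5, 2.5, 3.5]
reference = [1, 2, 3, 4] * 25   # inputs seen during validation
live = [4] * 100                # inputs now piling into one bin
print(drift_status(psi(reference, reference, edges)))  # stable
print(drift_status(psi(reference, live, edges)))       # send to human review
```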

Connected Infrastructure: Networks, Devices, and Interoperability

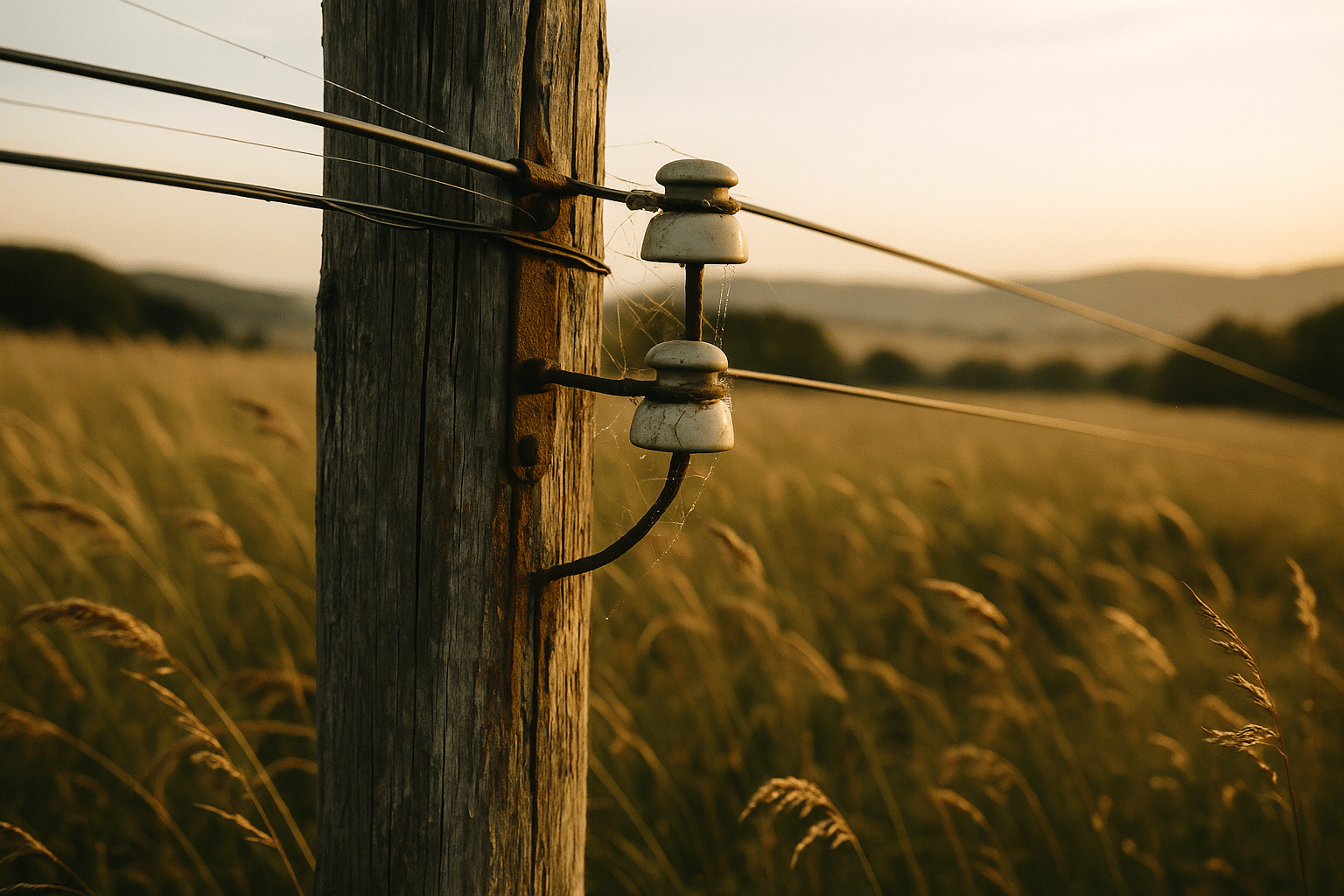

Connectivity is the circulatory system of modern technology. Fiber backbones, next‑generation cellular networks, and evolving Wi‑Fi standards together shape what experiences are possible. Typical consumer links now deliver hundreds of megabits per second, with lower latency improving voice, gaming, and remote work. Industrial sites often blend private cellular for wide coverage with deterministic wired links on critical lines. At the far edge, ultra‑low‑power radios let battery‑operated sensors run for years, reporting temperature, occupancy, or equipment status without frequent maintenance. This mosaic works when each layer is chosen for the job it does best.

Designers weigh performance across several axes:

– Bandwidth: how much data must move per second.

– Latency and jitter: how long data takes to arrive, and how much that delay can vary before users or control loops notice.

– Coverage: whether the link must cross a warehouse, a campus, or a city.

– Power: how much energy the device can spend on communication within its budget.

– Security: how identities are proven and traffic is protected end to end.
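One way to make those trade-offs explicit is to filter candidate links by hard requirements, then prefer the lowest power draw among the links that remain. This sketch runs on assumed numbers. The `Link` and `Need` types, the candidate names, and every figure in `LINKS` are illustrative placeholders, not specifications of any real radio or network.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Link:
    name: str
    bandwidth_mbps: float
    latency_ms: float
    jitter_ms: float
    range_m: float
    power_mw: float      # draw while transmitting
    encrypted: bool      # supports protected traffic end to end


@dataclass(frozen=True)
class Need:
    min_bandwidth_mbps: float
    max_latency_ms: float
    max_jitter_ms: float
    min_range_m: float
    max_power_mw: float
    require_encryption: bool = True


def pick_link(links: list[Link], need: Need) -> Optional[str]:
    """Among links meeting every requirement, prefer the one drawing least power."""
    feasible = [
        link for link in links
        if link.bandwidth_mbps >= need.min_bandwidth_mbps
        and link.latency_ms <= need.max_latency_ms
        and link.jitter_ms <= need.max_jitter_ms
        and link.range_m >= need.min_range_m
        and link.power_mw <= need.max_power_mw
        and (link.encrypted or not need.require_encryption)
    ]
    return min(feasible, key=lambda link: link.power_mw).name if feasible else None


# Illustrative placeholder figures, not vendor specifications.
LINKS = [
    Link("wired", 1000, 0.5, 0.05, 100, 800, True),
    Link("private_cellular", 200, 20, 5, 3000, 1000, True),
    Link("low_power_radio", 0.05, 1000, 500, 5000, 25, True),
]

robot_cell = Need(10, 2, 0.5, 50, 10_000)
wildlife_sensor = Need(0.001, 10_000, 10_000, 2000, 100)
print(pick_link(LINKS, robot_cell))        # wired
print(pick_link(LINKS, wildlife_sensor))   # low_power_radio
```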

A video analytics system might compress frames and push only metadata; a robotic cell might rely on wired links to keep control loops stable; a wildlife sensor might wake, transmit a few bytes, and sleep. Interoperability ties it together: open protocols, well-documented APIs, and consistent schemas prevent lock‑in and ease future upgrades. When devices agree on how to describe location, time, and status, dashboards can be assembled with fewer brittle adapters.
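Agreeing on how to describe location, time, and status can be as simple as defining one shared record type that every producer normalizes into. The sketch below assumes a hypothetical `StatusReport` schema with UTC ISO 8601 timestamps, decimal-degree coordinates, and a small fixed status vocabulary. Real deployments would typically publish such a schema in a language-neutral format, so that devices from different vendors can validate against it.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

ALLOWED_STATUS = {"ok", "degraded", "offline"}  # hypothetical shared vocabulary


@dataclass(frozen=True)
class StatusReport:
    device_id: str
    timestamp: str      # ISO 8601, always UTC
    latitude: float     # decimal degrees (assumed WGS 84)
    longitude: float
    status: str


def make_report(device_id: str, when: datetime, lat: float, lon: float,
                status: str) -> StatusReport:
    """Normalize inputs so every producer emits the same shape."""
    if when.tzinfo is None:
        raise ValueError("timestamps must carry a time zone")
    if status not in ALLOWED_STATUS:
        raise ValueError(f"unknown status {status!r}")
    return StatusReport(device_id, when.astimezone(timezone.utc).isoformat(),
                        lat, lon, status)


def to_json(report: StatusReport) -> str:
    return json.dumps(asdict(report), sort_keys=True)


def from_json(text: str) -> StatusReport:
    return StatusReport(**json.loads(text))


r = make_report("cam-7", datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc),
                52.52, 13.40, "ok")
print(to_json(r))
```

Rejecting naive timestamps and unknown status strings at the producer is what keeps downstream dashboards free of per-device adapters.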

Consider a supply chain scenario. Pallets carry tags that record shocks and temperature; gateways on loading docks forward summaries when trucks arrive; a route service merges traffic and weather; and a planning tool reprioritizes deliveries if conditions change. Warehouses track shelf positions with indoor positioning and count stock via overhead cameras. The win is not any single component, but the handshake among them. Compared to a siloed setup with manual updates, connected infrastructure shrinks inventory variance, cuts spoilage, and gives operations teams a shared source of truth. As networks and devices continue to mature, the emphasis shifts from raw speed to reliability, observability, and graceful degradation—designing systems that fail softly and recover quickly.
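The gateway step in this scenario forwards summaries rather than raw logs. It might look like the following sketch. The `Reading` record, the 2 to 8 °C band, and the 5 g shock limit are hypothetical example values chosen for illustration, not requirements from any standard.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Reading:
    t_s: int          # seconds since the tag started logging
    temp_c: float
    shock_g: float


def summarize(readings: Sequence[Reading],
              temp_range: tuple[float, float] = (2.0, 8.0),
              shock_limit_g: float = 5.0) -> dict:
    """Compress a pallet tag's log into the few fields a planner needs."""
    if not readings:
        return {"count": 0, "needs_inspection": False}
    lo, hi = temp_range
    temps = [r.temp_c for r in readings]
    excursions = sum(1 for t in temps if t < lo or t > hi)
    shocks = sum(1 for r in readings if r.shock_g > shock_limit_g)
    return {
        "count": len(readings),
        "temp_min_c": min(temps),
        "temp_max_c": max(temps),
        "temp_excursions": excursions,
        "shock_events": shocks,
        "needs_inspection": excursions > 0 or shocks > 0,
    }


log = [Reading(0, 4.0, 0.5), Reading(60, 9.5, 0.2), Reading(120, 5.0, 6.0)]
print(summarize(log))
```

A planning tool only needs `needs_inspection` and a few counts to reprioritize deliveries, so the truck's arrival triggers a small message rather than a full log upload.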

Sustainable Computing: Energy, Materials, and Circular Design

As digital demand rises, so does the responsibility to run it efficiently. Data centers already consume roughly one to two percent of global electricity, and new applications (AI training, high‑fidelity streaming, immersive interfaces) can push usage higher if unmanaged. Efficiency is a system property, not just a data center metric. It starts with code that avoids unnecessary work, models that are right‑sized, and requests that are batched when interactive speed is not required. It extends to hardware that accelerates common tasks and to power management that moves lightly loaded servers into deeper sleep states. Cooling deserves special attention: free‑air designs, liquid loops for dense racks, and hot‑aisle containment are practical tools, and some facilities even recover waste heat for nearby buildings.

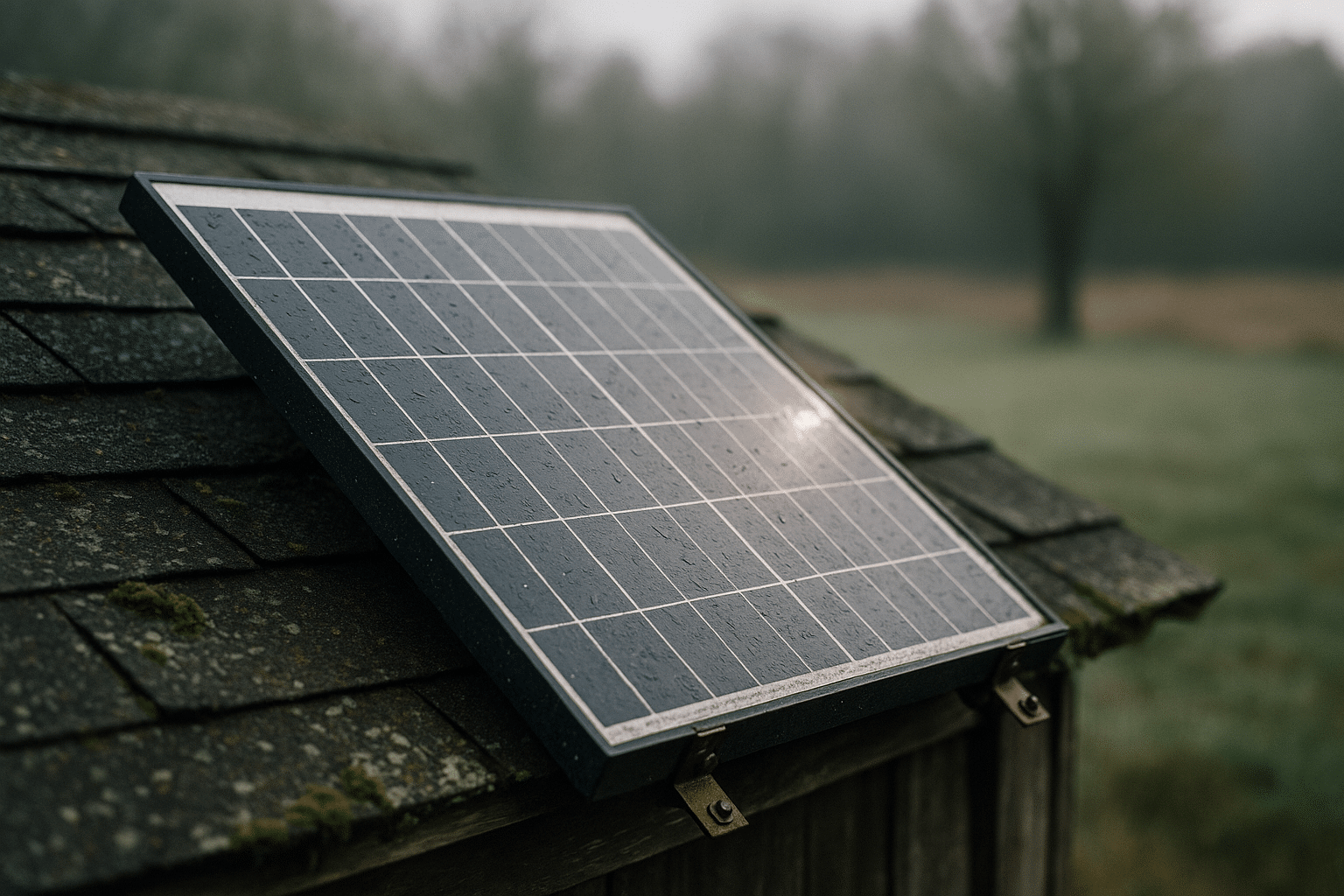

Cleaner energy supply is part of the picture, but timing matters too. Aligning non‑urgent jobs with periods of abundant wind or solar lowers both emissions and cost. Queueing AI training runs for overnight hours, or for windows when renewable output is forecast to surge, can make a meaningful dent. On the device side, energy‑aware radios and efficient codecs keep batteries small and lifetimes long. Material choices also play a growing role. Electronics rely on specialized alloys and elements, and expanding output without stewardship risks supply strain and environmental harm. Design for disassembly, modular components, and standardized fasteners make repairs less costly and extend useful life.
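Shifting a flexible job is a small search problem. Given an hourly forecast of grid carbon intensity, find the start hour that minimizes total intensity over the job's duration while still finishing before a deadline. This sketch assumes the job draws constant power, runs in whole hours, and has a forecast available. The forecast values are made up.

```python
from typing import Sequence


def best_start_hour(forecast_g_per_kwh: Sequence[float], duration_h: int,
                    deadline_h: int) -> int:
    """Start hour (index into the forecast) with the lowest summed intensity."""
    if duration_h <= 0:
        raise ValueError("duration must be at least one hour")
    last_start = min(deadline_h, len(forecast_g_per_kwh)) - duration_h
    if last_start < 0:
        raise ValueError("job cannot finish before the deadline")
    window = sum(forecast_g_per_kwh[:duration_h])   # hours 0 .. duration_h - 1
    best_start, best_sum = 0, window
    for start in range(1, last_start + 1):
        # Slide the window one hour: add the new last hour, drop the old first hour.
        window += forecast_g_per_kwh[start + duration_h - 1] - forecast_g_per_kwh[start - 1]
        if window < best_sum:
            best_start, best_sum = start, window
    return best_start


forecast = [400, 380, 350, 200, 150, 160, 300, 420]   # hypothetical gCO2/kWh per hour
print(best_start_hour(forecast, duration_h=3, deadline_h=8))  # 3
print(best_start_hour(forecast, duration_h=3, deadline_h=5))  # 2
```

The sliding window keeps the search linear in the forecast length. A tighter deadline can force a dirtier start hour, which is the trade-off flexible scheduling negotiates.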

Practical steps organizations can take include:

– Measure first: track workload energy, cooling performance, and carbon intensity of the grid.

– Optimize models: prune parameters, cache results, and prefer retrieval over recomputation.

– Shift workloads: schedule flexible tasks to align with cleaner power windows.

– Extend device life: favor repairable enclosures, socketed storage, and replaceable batteries.

– Close the loop: refurbish, resell, and responsibly recycle at end of life.
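For the measure-first step, a first-order estimate multiplies three quantities for each hour: the energy drawn by IT equipment, the facility overhead for cooling and power delivery, and the grid's carbon intensity. Expressing that overhead as power usage effectiveness (PUE) is an assumed metric choice here. The figures in the example are hypothetical, and the sketch ignores embodied emissions from manufacturing.

```python
from typing import Sequence


def emissions_kg(hourly_it_kwh: Sequence[float], hourly_g_per_kwh: Sequence[float],
                 pue: float) -> float:
    """Estimate operational emissions: IT energy x PUE x grid carbon intensity."""
    if len(hourly_it_kwh) != len(hourly_g_per_kwh):
        raise ValueError("energy and intensity series must align hour by hour")
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    grams = sum(e * pue * g for e, g in zip(hourly_it_kwh, hourly_g_per_kwh))
    return grams / 1000.0


# Same 20 kWh job and facility, split across two grid hours (hypothetical figures).
print(emissions_kg([10, 10], [300, 100], pue=1.5))   # 6.0
print(emissions_kg([0, 20], [300, 100], pue=1.5))    # 3.0
```

Both calls use the same energy and facility. Moving all the work into the cleaner hour halves the estimate, which is the effect the "shift workloads" step relies on.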

E‑waste already totals tens of millions of tonnes annually; stretching device lifetimes by even a year spreads the environmental cost of manufacturing over more years of use and delays replacement purchases. A sustainable approach is not only an environmental commitment; it is also operational discipline. Systems that use less energy, shed less heat, and recover gracefully from faults are generally more dependable and more economical to run.

Trust, Security, and Strategy: Preparing for What’s Next

As technology permeates every process, trust becomes a prerequisite for adoption. Attackers probe where connectivity and convenience grow fastest: phishing adapts to new interfaces, ransomware targets operational bottlenecks, and supply‑chain compromises move upstream into shared libraries and firmware. A practical defense treats identity as a first‑class control, encrypts data in transit and at rest, and segments networks so issues remain contained. Zero‑trust principles (verify explicitly, limit privileges, and assume breach) aren’t a silver bullet, but they do narrow the blast radius and shorten investigations. On the privacy front, data minimization, consent baked into flows, and techniques such as anonymization or differential privacy help reduce risk while preserving utility.
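One concrete instance of differential privacy is the Laplace mechanism, which adds calibrated noise to an aggregate such as a count. This is a teaching sketch. It assumes a count query with sensitivity 1 and uses the standard-library `random` module. Production systems generally rely on vetted libraries, because naive floating-point noise sampling has known pitfalls. `private_count` is a hypothetical helper name.

```python
import random
from typing import Callable, Iterable, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records: Iterable[int], predicate: Callable[[int], bool],
                  epsilon: float, rng: Optional[random.Random] = None) -> float:
    """Noisy count satisfying epsilon-differential privacy for sensitivity 1."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    # Adding or removing one person changes a count by at most 1,
    # so Laplace noise with scale 1/epsilon suffices.
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(42)
print(private_count(range(100), lambda v: v % 2 == 0, epsilon=1.0, rng=rng))
```

Smaller `epsilon` means more noise and stronger privacy. Each released answer also spends privacy budget, so repeated queries need to be accounted for.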

Governance matters as much as tooling. Teams should document what a system is intended to do, how it was evaluated, and how users can contest outcomes. Clear model cards and data lineage records foster dialog with auditors, customers, and partners. Observability closes the loop: metrics for accuracy, bias, drift, latency, and cost inform whether a model still earns its keep. When performance drifts, rollback plans and human review prevent small issues from compounding. This discipline encourages humility—systems describe probabilities, not certainties—and gives users a way to escalate edge cases rather than silently working around them.
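The rollback plan described above can be encoded as explicit guardrails checked on each release. In this sketch the `Metrics` fields, the threshold values in `Guardrails`, and the decision labels are all hypothetical. Teams would set their own limits from their evaluation history.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    accuracy: float
    fairness_gap: float     # e.g. largest accuracy gap between user groups
    drift_score: float      # e.g. a PSI-style input drift score
    p95_latency_ms: float
    cost_per_1k: float      # serving cost per thousand requests


@dataclass(frozen=True)
class Guardrails:
    max_accuracy_drop: float = 0.02
    max_fairness_gap: float = 0.05
    max_drift: float = 0.25
    max_p95_latency_ms: float = 200.0
    max_cost_per_1k: float = 1.0


def breaches(baseline: Metrics, current: Metrics, g: Guardrails) -> list[str]:
    """List every guardrail the current model version violates."""
    found = []
    if baseline.accuracy - current.accuracy > g.max_accuracy_drop:
        found.append("accuracy")
    if current.fairness_gap > g.max_fairness_gap:
        found.append("bias")
    if current.drift_score > g.max_drift:
        found.append("drift")
    if current.p95_latency_ms > g.max_p95_latency_ms:
        found.append("latency")
    if current.cost_per_1k > g.max_cost_per_1k:
        found.append("cost")
    return found


def decide(baseline: Metrics, current: Metrics, g: Guardrails = Guardrails()) -> str:
    # Any breach rolls back to the last good version and opens a human review.
    return "rollback_and_review" if breaches(baseline, current, g) else "keep"


baseline = Metrics(0.92, 0.02, 0.05, 120, 0.40)
current = Metrics(0.88, 0.03, 0.30, 130, 0.40)
print(breaches(baseline, current, Guardrails()))  # ['accuracy', 'drift']
print(decide(baseline, current))                  # rollback_and_review
```

Returning the full list of breaches, rather than stopping at the first one, gives reviewers the context they need to decide whether to retrain, recalibrate, or accept the change.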

For leaders planning the road ahead, a staged approach helps:

– Near term: inventory critical processes, map dependencies, and pilot narrowly scoped projects with success metrics.

– Mid term: invest in shared data models, platform capabilities, and training so teams stop rebuilding the same scaffolding.

– Long term: track emerging materials, quantum‑safe cryptography, and new interface paradigms to avoid dead ends.

The conclusion is practical and people‑centered. Skills compound when teams practice together; curiosity grows when wins are shared; resilience improves when systems are transparent. Whether you are a student exploring career paths, a professional refining a product, or a policymaker shaping guardrails, the same guidance applies: start small, measure honestly, build for recovery, and keep humans in the loop. Technology rarely changes everything overnight, but steady, well‑governed progress adds up—quietly at first, then all at once.